The fine-tuned model, Llama Chat, leverages publicly available instruction datasets and over 1 million human annotations. The chat demo's greeting reads: "Customize Llama's personality by clicking the settings button. I can explain concepts, write poems and code, solve logic puzzles, or even name your pets." Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters, and a separate repository hosts the 70B fine-tuned model. The paper's abstract summarizes the release: "In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters."

This release includes model weights and starting code for pretrained and fine-tuned Llama language models ranging from 7B to 70B parameters. To download Llama 2 model artifacts from Kaggle, you must first submit a request using the same email address as your Kaggle account. Code Llama is a family of state-of-the-art, open-access versions of Llama 2 specialized on code tasks. It is a code generation model built on Llama 2 and trained on 500B tokens of code, and it supports common programming languages. Code Llama has been released with the same permissive community license as Llama 2 and is available for commercial use.

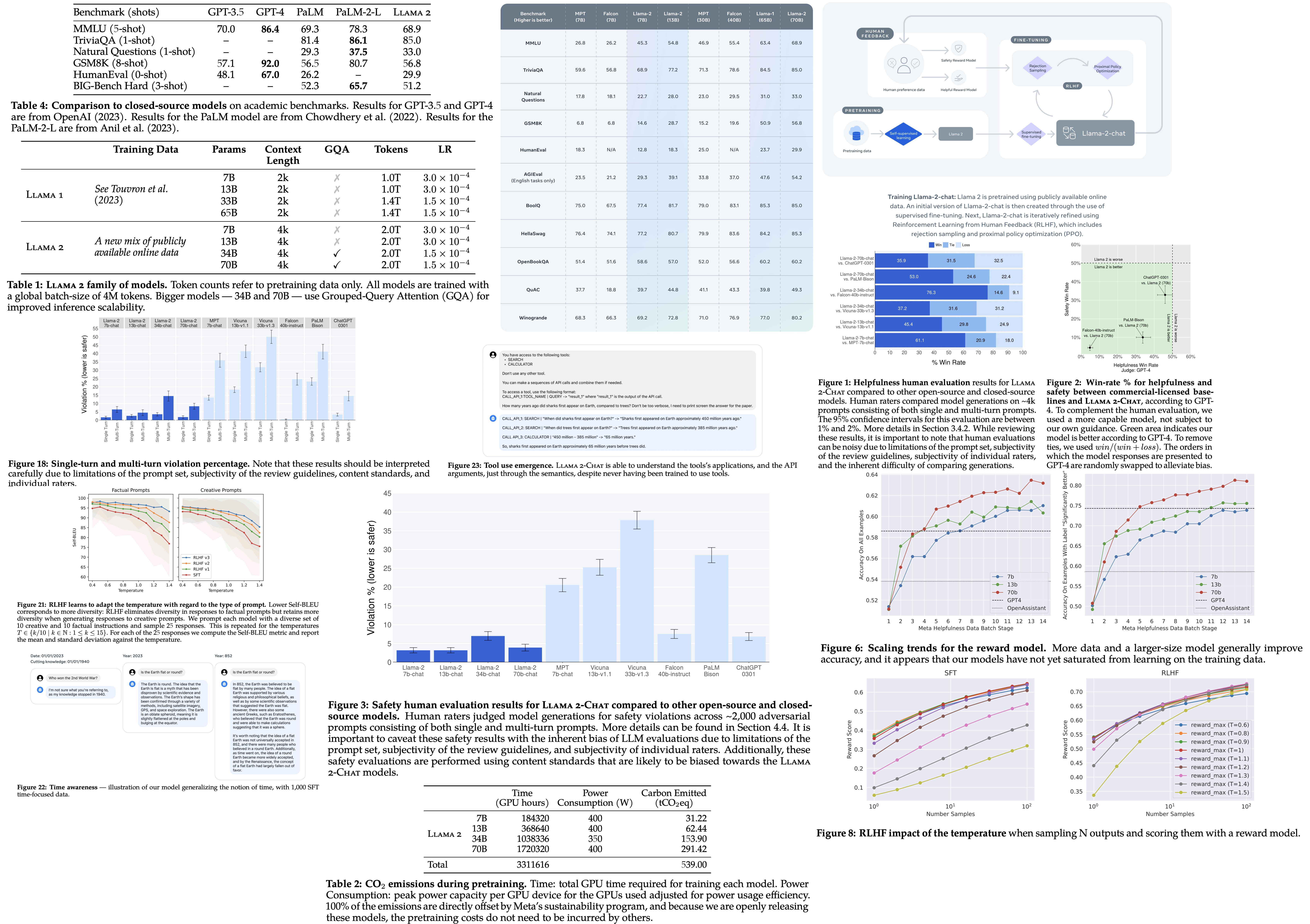

On July 18, 2023, Meta announced the availability of Llama 2, the next generation of its open source large language model, free for research and commercial use. Llama 2 models are trained on 2 trillion tokens and have double the context length of Llama 1. Llama Chat models have additionally been trained on over 1 million new human annotations. Compared with Llama 1, Llama 2 was trained on 40% more data and was fine-tuned for helpfulness and safety; the research paper and model cards give the details. Meta describes Llama 2 as a cutting-edge foundation model that offers improved scalability and versatility for a wide range of generative AI tasks.

Meta says it has a broad range of supporters around the world who believe in its open approach to today's AI, including companies that have given early feedback and are excited to build with Llama 2. The official Hugging Face organization for Llama 2 models from Meta asks users to visit the Meta website and accept the license terms before accessing the models. One launch announcement reads: "Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully support the launch with comprehensive integration ..." Wells Fargo has deployed open-source LLMs, including Meta's Llama 2 model, for some internal uses, according to Wells Fargo CIO Chintan Mehta.

Llama 2 builds on the original LLaMA (Large Language Model Meta AI), a state-of-the-art foundational large language model that Meta released publicly as part of its commitment to open science. The LLaMA paper introduces a collection of foundation language models ranging from 7B to 65B parameters, trained on trillions of tokens. In particular, LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, Chinchilla-70B and PaLM-540B.

Llama 2 uses supervised fine-tuning, reinforcement learning with human feedback, and a novel technique called Ghost Attention (GAtt), which is described in Meta's paper. Meta claims that Llama 2-Chat is as safe as or safer than other models, based on evaluation by human raters using 2,000 adversarial prompts, as discussed in the Llama 2 paper. Meta also says in its Llama 2 research that it made an effort to remove data from certain sites known to contain a high volume of personal information about private individuals. The Llama 2 Responsible Use Guide collects resources and best practices for responsible development of products powered by large language models.

One reader's summary (Aug 25, 2023) observes that Llama 2 was launched alongside a very long paper that includes substantial details on data. The paper describes the architecture in enough detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where the architecture has to be deduced.

To get started with Llama 2 on AWS, once you have the model you can either deploy it on a Deep Learning AMI that has both PyTorch and CUDA installed, or create your own EC2 instance with GPUs.

You can also run Llama 2, an advanced large language model, on your own machine. With up to 70B parameters and a 4k-token context length, it is free and open source for research. A Models (or LLMs) API can be used to connect easily to all popular LLM hosts, such as Hugging Face or Replicate, where all types of Llama 2 models are hosted. A common question is whether it is possible to host the Llama 2 model locally, on your own computer or on a hosting service, and then access that model using API calls, just as with OpenAI's API. One option is to work from source: navigate to the directory where you want to clone the llama2 repository (call this directory llama2) and clone the repository there. Another is Ollama, which sets itself up as a local server on port 11434. A quick curl command checks that the API is responding, and a non-streaming (that is, not interactive) REST call can then be sent to it.
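The non-streaming REST call to a local Ollama server mentioned above can be sketched in Python as follows. This is a minimal illustration, not the original article's command: it assumes Ollama is already running on port 11434, that a model has been pulled under the tag `llama2`, and that the request goes to Ollama's `/api/generate` endpoint. The helper names `build_generate_request` and `generate` are hypothetical, introduced only for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="llama2"):
    """Build a non-streaming POST request for Ollama's generate endpoint.

    The model tag "llama2" is an assumption; use whichever tag you pulled.
    """
    # "stream": False asks for one complete JSON reply instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama2", timeout=120):
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # In a non-streaming reply, the generated text is in the "response" field.
    return body["response"]
```

With the server running, `print(generate("Why is the sky blue?"))` should print the model's answer. Building the request separately from sending it makes it possible to inspect the payload without a live server.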
